
AWS Well-Architected Framework: The Security Pillar

This article is part of an ongoing series taking a deep look at Amazon's Well-Architected Framework, which is an incredible collection of best practices for cloud-native organizations. This month, we're digging into the Security Pillar.

At the 2018 San Francisco AWS Summit, Dr. Werner Vogels, AWS Chief Technology Officer, took the stage to introduce new products and services. Vogels spent nearly an hour on the topic of security, making several bold statements about the industry's security posture:

"It's our responsibility to protect our customers and their businesses…. What scares me the most is how complacent we've been about all the recent data breaches. It's not acceptable."

Vogels impressed upon attendees that security should be a priority for everyone, and that it should be baked in and automated from the very beginning – not an afterthought. AWS has codified many best practices and guidelines for architecting and operating secure systems in its Security Pillar Whitepaper for the Well-Architected Framework, which introduces itself thusly:

The security pillar encompasses the ability to protect information, systems, and assets while delivering business value through risk assessments and mitigation strategies. This paper will provide in-depth, best-practice guidance for architecting secure systems on AWS.

Organizations that are committed to security will have a pervasive, end-to-end approach to security, with a culture that appreciates the importance of protecting their systems and customer data, and investments that back up their commitment. What does this look like in practice?

- Security will be a consideration from the inception of a product or service, with security experts and team members involved from the beginning.

- Regularly scheduled penetration tests and audits will be viewed as an opportunity to learn, improve, and better protect customers, not as a hassle or obligation that must be met.

- Identity and authorization will be approached strategically, not haphazardly, and will be guided by the principle of least privilege.

- Security will be considered not only at the "outer" layer, but across the entire stack.

- Team members will be ready to handle security events, and armed with data, processes, and procedures that ensure a rapid and effective response.

Let's dive into the Security Pillar of the Well-Architected Framework to learn more about the best practices contained within.

If you're interested in learning about the birth of the AWS Well-Architected Framework, then check out our initial post, "Introducing the AWS Well-Architected Program."

Design Principles

Amazon outlines six design principles for security in the cloud:

- Implement a strong identity foundation

- Enable traceability

- Apply security at all layers

- Automate security best practices

- Protect data in transit and at rest

- Prepare for security events

Implement a Strong Identity Foundation

Controlling access to your resources in AWS can be challenging. AWS provides deep, granular, and powerful access controls through its Identity and Access Management (IAM) service. Your organization should leverage IAM to implement the principle of least privilege, which Wikipedia defines as:

A principle which requires that in a particular abstraction layer of a computing environment, every module (such as a process, a user, or a program, depending on the subject) must be able to access only the information and resources that are necessary for its legitimate purpose.

In short, the default behavior should be to deny access to data and resources, with access being granted only to users and systems that require it to do their job. In addition, access should only be granted for the duration of time that is required, with a focus on reducing the reliance on long-term credentials.

Enable Traceability

In the event of a security incident, you don't want to be left scrambling to find the information you need to react and remediate. Enabling traceability requires you to apply monitoring, alerting, and auditing for every action and change in your environment, in as close to real time as possible. AWS provides a number of tools and services to enable traceability, and organizations that make security a priority will ensure that they integrate logs and metrics into those systems. But traceability isn't just about enabling operators to respond to security events; it's also about enabling systems to respond via automation.

Apply Security at All Layers

Too frequently, security is treated as a priority only at the outer layer of an application. The reality is that such an approach leaves you vulnerable to much more extensive consequences in the event of a breach. By applying security controls at every layer (edge network, load balancer, instance, OS, and application), you limit the "blast radius" in the event that an attacker penetrates the outer layer of protection.

At Mission, we manage hundreds of workloads for our customers, and our experience has consistently shown that applying security at all layers significantly reduces risk, and provides confidence to our customers that their infrastructure is safe.

Automate Security Best Practices

Having a workforce that is educated about security and cares about its importance will greatly aid in your ability to protect your applications but, as much as we like to deny it, we humans are prone to error and forgetfulness. Many security best practices can be applied in software, leveraging configuration management tools and infrastructure-as-code templates that codify your best practices to ensure that you never miss a step.

Mission uses Chef and Terraform for our configuration management and infrastructure-as-code platforms. We maintain a tested library of base recipes and templates that we use to consistently apply best practices across all workloads that we manage. Not only does this ensure that we are consistent, it enables us to roll out new security protections en masse as we evolve.

Protect Data in Transit and at Rest

The data that an application manages isn't usually uniform. It has varying levels of sensitivity, and thus shouldn't be treated in a uniform way. AWS recommends segmenting your data by sensitivity level, so that you can apply stricter controls to more sensitive data. AWS, along with application programming environments, provides mechanisms for protecting data in transit and at rest, including encryption and tokenization. In addition, consider reducing or eliminating direct human access to data to reduce your risk – instead only providing access via systems with more built-in access controls.

Prepare for Security Events

No matter how well you apply the above design principles, it is likely that you will encounter a security event. Protect yourself by preparing for the eventuality of an incident by creating well-documented, frequently rehearsed incident management processes and procedures. Get your teams together to practice applying the procedures in simulations, and automatically trigger the process using automated detection when possible.

Using these design principles, you can help drive change within your organization to ensure that security is a priority, not an afterthought.

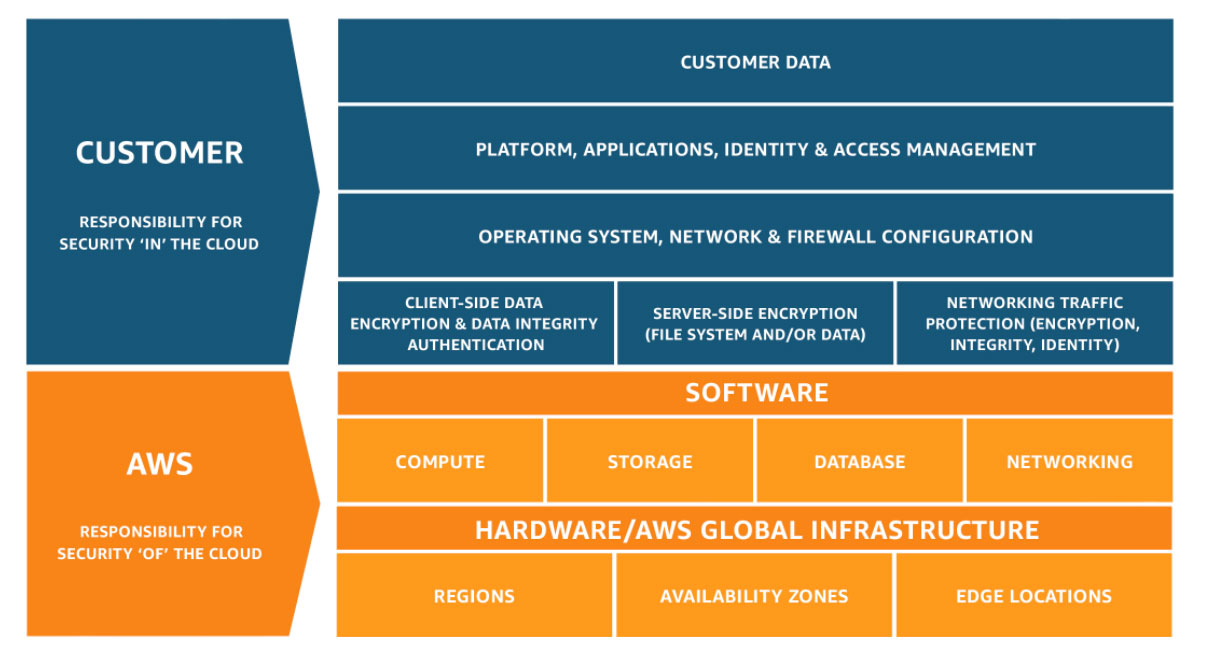

AWS Shared Responsibility Model

Any conversation about security in AWS should include an overview of the AWS Shared Responsibility Model, which outlines AWS' bifurcation of security responsibilities between itself and its customers. In the AWS Shared Responsibility Model, AWS is responsible for the security of the cloud, and the customer is responsible for security in the cloud. That means that AWS customers don't need to worry about the security of data centers, the physical network, or underlying virtualization or application software that underpins AWS' services. Instead, customers must focus on their own data, applications, identity and access management, etc.

Areas of Focus for Security

Amazon describes security in the cloud through five key areas of concern:

- Identity and access management

- Detective controls

- Infrastructure protection

- Data protection

- Incident response

Let's dig into these areas.

Identity and Access Management

Perhaps the most foundational element of security, identity and access management ensures that your resources are only accessed by properly authorized and authenticated clients for valid reasons. Identity and access management is primarily concerned with the definition of your "principals" (users, groups, services, and roles that take actions in your account), policies, and their management.

Best practices for identity and access management fall into two main topics: protecting AWS credentials and fine-grained authorization.

Protecting AWS Credentials

Credential protection is an important part of security, regardless of the context. With AWS, each and every API call and touch point with the service will be authenticated, making it even more critical that your credentials are handled with care. Credential management should be intentional, and your organization should define practices and patterns to help you stay secure.

Every AWS account has a single "root" user, which is the first identity that is created upon opening an AWS account. The root user has access to every AWS service, resource, and piece of data within an AWS account, so it must be handled with extreme care. AWS recommends that the root user isn't used for everyday tasks, and that you protect it with Multi-Factor Authentication (MFA). What should you use the root user for? AWS recommends that you only use the root user to create an initial set of IAM users and groups that will collectively be used to handle your identity management.

AWS provides the ability to federate with existing identity providers using SAML, if you already have another source of record for identity. This will enable you to consistently apply trust to your identities, and limit the need to create duplicate records in AWS IAM.

Another best practice is to implement and enforce strong authentication, including a password policy with a minimum length and complexity for passwords, mandatory rotation, and MFA for any users that require access to the AWS Management Console. For users that require API access, federation tends to be impractical, and in those cases, AWS recommends issuing access key IDs and secret access keys. Permission can then be granted using IAM roles. When issuing access key IDs and secret access keys, take care to store them only in secured locations, and never store them in source control repositories.

Over the years, many people have been bitten by careless handling of credentials. There are numerous cases where keys were stored in public GitHub repositories, which are routinely scanned by bad actors. Leaked credentials can result in data being lost or stolen, or massive AWS bills resulting from hackers spinning up large EC2 instances to mine cryptocurrency.
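To make the risk concrete, here is a minimal sketch of the kind of scan that can run before code is pushed. It assumes the common format for IAM user access key IDs ("AKIA" followed by 16 uppercase letters or digits), and the function name `find_access_key_ids` is hypothetical. A pattern match like this is only a heuristic and is no substitute for a dedicated secret-scanning tool.

```python
import re

# IAM user access key IDs are 20 characters: "AKIA" plus 16 uppercase
# letters or digits. This pattern is a heuristic, not a guarantee.
ACCESS_KEY_ID_PATTERN = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_access_key_ids(text):
    """Return (line_number, key_id) pairs for every suspected key ID in text."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID_PATTERN.finditer(line):
            findings.append((line_number, match.group(1)))
    return findings


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
sample_config = """\
[default]
region = us-west-2
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
"""

for line_number, key_id in find_access_key_ids(sample_config):
    # Print only a prefix so the scanner never echoes a full credential.
    print(f"line {line_number}: possible access key ID {key_id[:8]}...")
```

A check like this fits naturally into a pre-commit hook or a CI step, so that a suspected key blocks the change before it ever reaches a shared repository.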

When dealing with service-to-service authentication, AWS recommends taking advantage of AWS Security Token Service (STS) to request temporary, limited-privilege credentials. In addition, you can use IAM instance profiles to attach an IAM role to EC2 instances, so that applications running on those instances receive temporary credentials instead of relying on stored long-term keys.

Fine-Grained Authorization

Earlier, we introduced the concept of the principle of least privilege, and fine-grained authorization is the mechanism to implement that principle using IAM roles and policies. To have a complete and effective strategy, create and maintain a full list of "principals" (users, groups, services, and roles) that you will use in your AWS account, and then create specific policies that are clearly defined based upon their purpose and requirements.

By ensuring that authenticated identities can only perform the minimal and necessary set of operations to fulfill a task, you limit your "blast radius" in the event that credentials are compromised.
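As an illustration, the sketch below shows a policy document for a hypothetical reporting service that only needs to read objects under one prefix of one bucket. The bucket name, prefix, and the `is_allowed` helper are assumptions made for this example. The helper is a deliberately simplified model of evaluation (explicit deny wins, then allow, otherwise implicit deny) and ignores conditions, principals, and the other policy types that real IAM evaluation considers.

```python
import json
from fnmatch import fnmatchcase

# Read-only access to a single prefix in a single bucket. The bucket name
# and prefix are placeholders for this example.
REPORTING_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadReportsOnly",
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": ["arn:aws:s3:::example-reports-bucket/reports/*"],
        }
    ],
}


def is_allowed(policy, action, resource):
    """Simplified evaluation: explicit Deny wins, then Allow, else implicit deny.

    Matching here is case-sensitive and only understands "*" style wildcards;
    it is a teaching aid, not a replacement for the real policy engine.
    """
    allowed = False
    for statement in policy["Statement"]:
        action_match = any(fnmatchcase(action, p) for p in statement["Action"])
        resource_match = any(fnmatchcase(resource, p) for p in statement["Resource"])
        if action_match and resource_match:
            if statement["Effect"] == "Deny":
                return False
            allowed = True
    return allowed


print(json.dumps(REPORTING_POLICY, indent=2))
print(is_allowed(REPORTING_POLICY, "s3:GetObject",
                 "arn:aws:s3:::example-reports-bucket/reports/2018/q1.csv"))
print(is_allowed(REPORTING_POLICY, "s3:DeleteObject",
                 "arn:aws:s3:::example-reports-bucket/reports/2018/q1.csv"))
```

Because nothing is allowed unless a statement says so, a compromised reporting credential cannot write, delete, or read outside the one prefix it was granted.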

AWS Organizations can also be employed to centrally manage multiple accounts, with the ability to group accounts into organizational units. Service Control Policies (SCPs) can then be used to enforce policies for multiple AWS accounts.
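One common use of an SCP is a guardrail that no account in an organizational unit can switch off, regardless of its own IAM policies. The sketch below builds such a policy document as plain JSON. The function name and the choice of guardrail (blocking attempts to stop or delete CloudTrail trails) are assumptions for illustration, not something prescribed by the whitepaper.

```python
import json


def build_protect_cloudtrail_scp():
    """Build an SCP document that denies tampering with CloudTrail trails."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyCloudTrailTampering",
                "Effect": "Deny",
                "Action": [
                    "cloudtrail:StopLogging",
                    "cloudtrail:DeleteTrail",
                ],
                "Resource": "*",
            }
        ],
    }


# The resulting JSON is what you would attach to an organizational unit.
print(json.dumps(build_protect_cloudtrail_scp(), indent=2))
```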

Detective Controls

Detective controls have been an integral part of security since well before the advent of cloud, but in a cloud-centric world, they become an even more essential aspect of protection. Using detective controls, security threats and potential incidents can be identified early, before they escalate. In addition, detective controls enhance governance, compliance, and forensics in the event of an incident.

AWS addresses detective controls in two sections in the Security Pillar Whitepaper: capturing and analyzing logs, and integrating auditing controls with notification and workflow.

Capture and Analyze Logs

Mission has been providing managed infrastructure services for customers for over 17 years, long before the dawn of cloud infrastructure. Aggregating and analyzing logs has always been a part of our business, but the approach has evolved significantly as we moved out of the data center and into the cloud.

The programmatic nature of cloud infrastructure means that listing, auditing, and managing resources is much simpler than it was in the data center. AWS provides rich APIs that can be used to gather accurate, reliable metadata about all of the resources in your environment. In the past, Mission had to resort to manual audits, scans, and agents.

Mission manages hundreds of customers and workloads on AWS, which makes the traditional approach to log aggregation no longer viable. Instead of standing up complex, difficult-to-manage-and-scale log aggregation backends, we can now rely on native services in AWS to handle the hard work for us. Services like Amazon Elasticsearch Service, Amazon EMR, Amazon CloudWatch Logs, and Amazon S3 coupled with Amazon Athena mean that we can collect and aggregate massive amounts of data with confidence.

AWS recommends leveraging Amazon CloudWatch Logs with AWS CloudTrail and other service-specific logging to centralize your data. Having a global store of logs for analysis helps provide consistency, and allows you to perform queries against logs from various sources in an integrated way. Application and non-service logs can be integrated as well, using agents, syslog, native operating system logs, and other third-party tools. EC2 instances can be managed using AWS OpsWorks, AWS CloudFormation, or EC2 user data to ensure that agents are always installed and properly configured.

Having a centralized, comprehensive store of logs is only useful if it can be quickly and effectively mined for insights. When architecting your applications and workloads, be sure to consider your approach for making that data actionable. AWS provides big data services like Amazon EMR, Amazon Elasticsearch, and Amazon Athena to help you analyze data.
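As a small example of making log data actionable, the sketch below counts failed console sign-ins per source IP address from a CloudTrail log file. It assumes the standard CloudTrail layout (a top-level `Records` list, with `ConsoleLogin` events reporting `Failure` in `responseElements`). The sample records are invented for illustration, and the function name is hypothetical.

```python
import json
from collections import Counter


def failed_console_logins_by_ip(cloudtrail_json):
    """Count failed ConsoleLogin events per source IP in a CloudTrail log file."""
    records = json.loads(cloudtrail_json).get("Records", [])
    failures = Counter()
    for record in records:
        if record.get("eventName") != "ConsoleLogin":
            continue
        # responseElements can be null for some events, so default to {}.
        response = record.get("responseElements") or {}
        if response.get("ConsoleLogin") == "Failure":
            failures[record.get("sourceIPAddress", "unknown")] += 1
    return failures


# Invented sample data, using IP addresses reserved for documentation.
sample_log = json.dumps({
    "Records": [
        {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.10",
         "responseElements": {"ConsoleLogin": "Failure"}},
        {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.10",
         "responseElements": {"ConsoleLogin": "Failure"}},
        {"eventName": "ConsoleLogin", "sourceIPAddress": "198.51.100.7",
         "responseElements": {"ConsoleLogin": "Success"}},
        {"eventName": "DescribeInstances", "sourceIPAddress": "198.51.100.7",
         "responseElements": None},
    ]
})

for ip, count in failed_console_logins_by_ip(sample_log).most_common():
    print(f"{ip}: {count} failed console logins")
```

At real scale you would more likely express the same question as a query in Amazon Athena or Amazon Elasticsearch Service, but the shape of the analysis is the same.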

Integrate Auditing Controls with Notification and Workflow

Mission's experience as a Managed Service Provider with hundreds of workloads has driven home the practice of automation and process. With a massive volume of information to sift through to discover anomalies, manual processing is simply not viable. AWS best practice is to provide deep integration for security events and findings into your notification and workflow systems. At Mission, we leverage a ticketing system, along with ChatOps practices, to track and surface insights as they arise. Issues can be automatically or manually escalated, prioritized, and handled directly from our collaboration platform.

Mission also follows the AWS best practice of detecting changes to infrastructure, and integrating those notifications into our workflow. Maintaining secure architectures in the world of on-demand infrastructure requires ongoing, process-driven validation workflows.

Amazon CloudWatch Events is a critical service for routing events of interest through appropriate workflows. CloudWatch Events is backed by an extremely powerful and flexible rule engine, which can be used to parse, transform, and route events. At Mission, we make use of AWS Lambda functions and Amazon SNS notifications as targets, which help us to automatically notify and remediate.
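To give a feel for how rules select events, here is a sketch of an event pattern that matches security group ingress changes recorded by CloudTrail, along with a tiny local matcher for experimenting with it. The `matches` function is a hypothetical helper that covers only exact-value matching on nested fields; the real rule engine supports more than this.

```python
# An event pattern for security group ingress changes. Each field lists the
# values that are acceptable for that field in an incoming event.
SECURITY_GROUP_CHANGE_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": [
            "AuthorizeSecurityGroupIngress",
            "RevokeSecurityGroupIngress",
        ],
    },
}


def matches(pattern, event):
    """Return True if every field in the pattern is satisfied by the event.

    Simplified: nested dicts are matched recursively, and leaf lists match
    when the event's value equals one of the listed values.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


# A trimmed-down, invented event for illustration.
sample_event = {
    "source": "aws.ec2",
    "detail-type": "AWS API Call via CloudTrail",
    "detail": {
        "eventSource": "ec2.amazonaws.com",
        "eventName": "AuthorizeSecurityGroupIngress",
        "awsRegion": "us-west-2",
    },
}

print(matches(SECURITY_GROUP_CHANGE_PATTERN, sample_event))
```

Fields that the pattern does not mention, such as `awsRegion` above, are ignored, which keeps patterns short and focused on what you care about.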

AWS Config is another critical service to consider, as it enables you to check the configuration of your resources via AWS Config Rules, and then enforce compliance policies, roll back inappropriate changes, and even redirect information to ticketing systems.

Continuous Integration and Continuous Deployment (CI/CD) are a huge part of any DevOps practice, and they should be designed to perform continuous security checks where possible. You can reduce security misconfigurations in CI/CD by using the Amazon Inspector service, which lets you programmatically find security defects and misconfigurations by checking for common vulnerabilities (CVEs) and deviations from best practices. By taking this approach, you can build out a workflow that notifies engineering teams in a trackable way in the event that actions need to be taken.

Infrastructure Protection

Infrastructure protection is a broad set of control methodologies that help you meet industry best practices and compliance/regulatory obligations. It's also a critical component for any information security practice, as it helps provide assurances that systems within your workload aren't accessed in an unauthorized way, or vulnerable to breach. Infrastructure protection includes network and host level boundaries, operating system level hardening, and more.

AWS breaks down infrastructure protection into three main categories of approach: protecting network and host-level boundaries, system security configuration and maintenance, and service-level protection enforcement.

Protecting Network and Host-Level Boundaries

Recall that AWS best practice is "security at all layers," and providing isolation via your network is one of the most critical layers. Network topology, design, and management are foundational to isolating and creating boundaries within your environment, and AWS provides tools and APIs to help drive your implementation. There are many security considerations when designing your network, but one fundamental best practice is this: design your network in such a way that only the desired network paths and routing are allowed. In this way, you should consider your network design and configuration another application of the principle of least privilege.

When setting out to design your network, AWS recommends that you start from the outside and move inwards. What parts of your system, such as load balancers, need to be publicly accessible? Do you need connectivity between AWS and your data center via a private network? Given these outer-layer considerations, set up your VPC, including subnets, routing tables, gateways, network ACLs, and security groups to support your requirements, while still providing as much host-level protection as possible.

Implementing this "least privilege" approach to your network requires taking advantage of the rich features that Amazon VPC provides, especially as you consider when to publicly expose subnets via an internet gateway, and how to apply NACLs to strictly define the traffic that is allowed.

Driving down to the host level, be sure to carefully design your security groups using the same principles. Instead of having a single security group per instance, define a richer set of common security groups, with relationships between each other. AWS gives the example of a database tier security group which would ensure that all instances in the tier would only accept traffic from instances within the application tier.
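The database tier example can be sketched as data. The snippet below builds an ingress rule in the `IpPermissions` shape used by the EC2 API, referencing the application tier's security group rather than an IP range, and adds a small audit helper that flags rules open to the entire internet. The function names, the port, and the group ID are placeholders chosen for this example.

```python
def database_tier_ingress(app_tier_group_id, port=5432):
    """Ingress rule that admits traffic only from the application tier's group."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            # Referencing a security group instead of a CIDR block means the
            # rule follows instances as they join or leave the app tier.
            "UserIdGroupPairs": [{"GroupId": app_tier_group_id}],
        }
    ]


def open_to_world(ip_permissions):
    """Return the permissions that allow traffic from any IPv4 or IPv6 address."""
    flagged = []
    for permission in ip_permissions:
        v4 = any(r.get("CidrIp") == "0.0.0.0/0"
                 for r in permission.get("IpRanges", []))
        v6 = any(r.get("CidrIpv6") == "::/0"
                 for r in permission.get("Ipv6Ranges", []))
        if v4 or v6:
            flagged.append(permission)
    return flagged


db_rules = database_tier_ingress("sg-0123456789abcdef0")  # placeholder ID
risky_rule = {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
              "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}

print(len(open_to_world(db_rules)))                 # rules from the tiered design
print(len(open_to_world(db_rules + [risky_rule])))  # after adding an open SSH rule
```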

As we discussed last month when reviewing the Reliability Pillar of the Well-Architected Framework, you should use non-overlapping IP addresses when defining VPCs to avoid conflict with your other VPCs or your data centers. In addition, ensure that you apply both inbound and outbound rules to your NACLs.
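Checking for overlapping address ranges is easy to automate before any VPC is created. The sketch below uses Python's standard `ipaddress` module; the network names and CIDR blocks are invented for illustration, and `find_overlaps` is a hypothetical helper.

```python
import ipaddress
from itertools import combinations


def find_overlaps(named_cidrs):
    """Return pairs of network names whose CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr)
                for name, cidr in named_cidrs.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Invented address plan: the data center range collides with the prod VPC.
address_plan = {
    "prod-vpc": "10.0.0.0/16",
    "staging-vpc": "10.1.0.0/16",
    "datacenter": "10.0.128.0/17",
}

for name_a, name_b in find_overlaps(address_plan):
    print(f"{name_a} overlaps {name_b}")
```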

System Security Configuration and Maintenance

Maintaining secure systems in your environment is a key aspect of applying security at all layers. There are many controls available when considering securing your systems, including OS-level firewalls, virus/malware and CVE/vulnerability scanners, and more. Because we manage thousands of servers on behalf of hundreds of customers, Mission takes a strict automation-driven approach. For example, we leverage Amazon's EC2 Systems Manager service to apply patches across our fleet, and use Chef cookbooks to maintain consistent configurations for both the OS and deployed software.

Automation is also a critical aspect of implementing the principle of least privilege to operators. Mission limits or removes operator access whenever possible, and uses tools from Chef and EC2 Systems Manager to provide operators with the limited access they need. Consider automating routine vulnerability assessment and scans during deployment, and centralize your security findings with Amazon Inspector.

AWS has created an amazingly powerful, agnostic platform for managing your systems with EC2 Systems Manager. Mission makes extensive use of EC2 Systems Manager to manage thousands of instances, and I highly recommend it for implementing security best practices.

Enforcing Service-Level Protection

Workloads deployed on AWS can take advantage of potentially dozens of services. To limit the surface area of attack, implement service-level protections that apply the principle of least privilege to access by both users and automated systems.

AWS IAM is the primary tool used for implementing service-level protection. Design your policies carefully, and regularly audit the policies for accuracy as your workload evolves. Some services provide additional controls that operate at the resource-level, such as Amazon S3 bucket policies. Ensure that you have a complete view of how these policies and controls are used in your environment, so that you can apply a least-privilege methodology.

Data Protection

In the wake of recent high-profile data leaks and the passage and implementation of GDPR, data protection is more important than ever. Architecting secure systems requires a diligent and thorough approach to protecting data, and it all starts with classifying and categorizing data based upon levels of sensitivity. Once data is classified, there are a number of best practices to apply to keep it safe: encryption/tokenization, protection of data at rest, protection of data in transit, and data backup/replication/recovery.

Data Classification

Segmenting and classifying your data enables you to apply best practices and security controls to your data effectively. The goal of data classification is to categorize your data by levels of sensitivity, which requires you first to understand the types of data present in your system, where it will be stored, and how it is required to be accessed. Data classification is not a one-time operation, and your classifications must be maintained over time.

AWS recommends making use of resource tags, IAM policies, AWS KMS, and AWS CloudHSM to implement your policies for data classification, but you can also use third-party and open-source solutions. For example, in one of my applications, I make extensive use of resource tags to classify specific resources that contain highly sensitive data.
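A tag-driven classification scheme might look something like the sketch below. Everything here is an assumption for illustration: the tag key `DataClassification`, the three sensitivity levels, and the control names are hypothetical and would be replaced by whatever your own classification model defines.

```python
# Hypothetical tag key and sensitivity levels for this example.
CLASSIFICATION_TAG = "DataClassification"

# Controls that each sensitivity level requires (illustrative names only).
REQUIRED_CONTROLS = {
    "public": set(),
    "internal": {"encryption_at_rest"},
    "confidential": {"encryption_at_rest", "access_logging"},
}


def missing_controls(resource):
    """Return the controls a resource still needs, based on its classification tag."""
    level = resource["tags"].get(CLASSIFICATION_TAG)
    if level not in REQUIRED_CONTROLS:
        # Untagged or unrecognized resources are treated as the most
        # sensitive level, so a missing tag never weakens protection.
        level = "confidential"
    return REQUIRED_CONTROLS[level] - set(resource["controls"])


customer_bucket = {
    "tags": {CLASSIFICATION_TAG: "confidential"},
    "controls": ["encryption_at_rest"],
}
untagged_volume = {"tags": {}, "controls": []}

print(sorted(missing_controls(customer_bucket)))
print(sorted(missing_controls(untagged_volume)))
```

Defaulting unclassified resources to the strictest level is a design choice in this sketch; it means gaps in tagging show up as audit findings rather than silently passing.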

Encryption/Tokenization

While often grouped together, encryption and tokenization are actually two distinct schemes for data protection. Encryption creates a representation of content that makes it unreadable without a secret key, while tokenization is a mechanism of generating a "token" that represents a sensitive piece of information (while not containing the information itself). Both can and should be deployed to secure and protect data.

One exemplar use case for tokenization is credit card processing. By using tokens, rather than credit card numbers, you can significantly reduce the burden of compliance and improve the security of the underlying data. The same approach can be applied to other sensitive data, including Personally Identifiable Information (PII) like Social Security Numbers, phone numbers, etc.
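The sketch below shows the core idea of tokenization in a few lines: a random token stands in for the sensitive value, and only the vault can map it back. The `TokenVault` class is hypothetical and keeps its mapping in memory; a real vault would be a hardened, access-controlled, durable service, and the card number used is a widely published test number.

```python
import secrets


class TokenVault:
    """In-memory token vault, for illustration only."""

    def __init__(self):
        self._value_by_token = {}
        self._token_by_value = {}

    def tokenize(self, value):
        """Return a random token for the value, reusing any existing token."""
        if value in self._token_by_value:
            return self._token_by_value[value]
        # The token is random, so it reveals nothing about the original value.
        token = "tok_" + secrets.token_urlsafe(16)
        self._value_by_token[token] = value
        self._token_by_value[value] = token
        return token

    def detokenize(self, token):
        """Look up the original value; raises KeyError for unknown tokens."""
        return self._value_by_token[token]


vault = TokenVault()
card_number = "4111111111111111"  # well-known test card number
token = vault.tokenize(card_number)

# Downstream systems store and pass around only the token.
print(token.startswith("tok_"))
print(vault.detokenize(token) == card_number)
```

Unlike encryption, there is no key that turns the token back into the card number; systems that only ever see tokens hold nothing worth stealing.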

The largest challenge when applying encryption to sensitive data is key management. The AWS KMS service is an excellent resource that makes the process much simpler. AWS KMS provides durable, secure, and redundant storage for keys, key-level policies, and more. With AWS CloudHSM, you can take advantage of dedicated hardware security modules (HSMs) which are often required for contractual, corporate, or regulatory compliance.

Finally, when applying tokenization and encryption to your data, consider the data classification model, including the levels of access required to specific data, and any compliance obligations.

Protecting Data at Rest

With AWS, there are a multitude of ways to persist data, including block and object storage, databases, and archives. Protecting this persisted data, or "data at rest," is another layer to consider when architecting secure environments.

AWS KMS is integrated with many AWS services to enable encryption, including Amazon S3 and Amazon Elastic Block Store (EBS). You can even use an encryption key from AWS KMS to encrypt Amazon RDS database instances, including their backups. If you like, you can implement your own encryption-at-rest approach, choosing to encrypt data before storing it.

Finally, when implementing your protections for data at rest, refer back to your data classification model to drive your decisions.

Protecting Data in Transit

Cloud-native architectures are built from a variety of components and services that must communicate with each other, often exchanging data or references to data that may be sensitive in nature. Data in transit should be protected to ensure overall system security.

AWS services provide HTTPS endpoints for communication, secured by the latest version of Transport Layer Security (TLS). When designing your own services, take advantage of AWS Certificate Manager (ACM), Amazon API Gateway, Amazon CloudFront, and Elastic Load Balancing (ELB) to ensure that your services are deployed with encrypted HTTPS endpoints. For systems that must communicate across VPCs or external networks, leverage VPN connectivity to secure the data in transit.
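Encryption in transit can also be enforced, not just offered. As one example, the sketch below builds an Amazon S3 bucket policy that denies any request made without TLS, using the `aws:SecureTransport` condition key. The function name and bucket name are placeholders for this example.

```python
import json


def deny_insecure_transport_policy(bucket_name):
    """Build a bucket policy that rejects requests not made over HTTPS."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


print(json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2))
```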

Again, when implementing your protections for data in transit, refer back to your data classification model to drive your decisions.

Data Backup, Replication, and Recovery

Backup and replication are meant to protect you in the event of a disaster. But what about your customers? The data contained within your backups and replicas must be protected and secured just as well as primary data.

Amazon S3 boasts 11 nines of durability for objects, and is well-integrated with other AWS services, including Amazon Glacier, which provides a secure, durable, and very low-cost target for data archiving and backup. Amazon RDS can perform snapshots and backups of your databases to S3, and EBS volumes can be snapshotted and copied across Regions. Amazon S3, EBS, and Glacier all support encryption. For additional security, consider storing your backups in a separate AWS account. At Mission, we make use of Lambda functions to create automated secure backups across our fleet.

While ensuring that your backups are secure and protected is critically important, you also must define and implement repeatable processes for recovery. AWS recommends running regular "game day" scenarios to test your backup/recovery strategy.

Incident Response

No matter how well-considered your security practices, you must still put in place a response plan for security incidents, including a mitigation plan. By having your tools, processes, and procedures in place before an incident, you can greatly reduce the impact of security events, and the time to restore operations to a known good state. Moreover, by practicing incident response, your teams will be well prepared to calmly act in the event of an incident.

AWS recommends using resource tagging to help organize your teams during incident response. By flagging resources based upon the sensitivity and classification of their data, the team responsible for mitigation, and other information, you can help your team maintain situational awareness. In addition, these tags can help ensure that the right people get access in a timely fashion to help mitigate, contain, and then perform forensics.

AWS APIs provide the power to automate many tasks, including isolating instances via security groups, removing them from load balancers, and removing compromised instances from auto scaling groups. In addition, you can use EBS snapshots to capture the state of a system under investigation.

One best practice to consider is the implementation of a "Clean Room" approach during incident response. Before a root cause has been identified, it can be very difficult to conduct an investigation because the environment itself is untrusted. By leveraging CloudFormation, a new, trusted environment (a "clean room") can be provisioned to perform deep investigation.

Conclusion

I hope this deep dive into security has inspired you to advocate for a comprehensive, layered approach to security, including applying the principle of least privilege. To learn more about the AWS Well-Architected Framework, check out our webinar on the Security Pillar. By following some of the best practices laid out in the AWS Well-Architected Whitepaper on Security, you can help drive that change in your organization.

As an AWS Well-Architected Review Launch Partner, Mission provides assurance for your AWS infrastructure, performing compliance checks across the five key pillars. Reach out to Mission now to schedule a Well-Architected Review. Additionally, Mission Cloud offers a free tool called Cloud Score to benchmark your environment against the Well-Architected pillars that define AWS best practices.

FAQ

- How do the principles outlined in the AWS Security Pillar apply to hybrid cloud environments where resources are distributed between AWS and on-premises data centers?

In hybrid cloud environments, the AWS Security Pillar's principles can be extended by ensuring consistent security policies across both AWS and on-premises resources. This involves using AWS services to support hybrid architectures and ensure that on-premises solutions comply with the same security standards in the cloud.

- What are the specific challenges and considerations for applying the principle of least privilege in large organizations with complex access control needs?

Applying the principle of least privilege in large organizations involves carefully defining user roles and responsibilities, using identity and access management tools to enforce access controls, and regularly auditing permissions to ensure they align with current job requirements.

- How does AWS recommend that organizations prepare for and respond to security events in a way that minimizes downtime and data loss?

AWS recommends preparing for security events by implementing robust monitoring and alerting systems, conducting regular security assessments and drills, and having an incident response plan. This approach helps minimize downtime and data loss by enabling rapid detection and response to security threats.

Author Spotlight:

Jonathan LaCour
